Why Your Atmos Mix Will Sound Different On Apple Music

In this article Edgar Rothermich gives a detailed explanation of the differences between Dolby Atmos and Apple’s Spatial Audio and all the steps you have to take to audition a Spatial Audio rendering of your Atmos mix!

Before getting into the details, let's look at the following situation:

Imagine you mix a simple pop song with drums, bass, guitars, and vocals. You put a small room on the drums with a 1s reverb time, you add a different reverb on the vocals, let's say 2s, and for the guitar, you create a nice 4s spacey effect. You balance everything perfectly during the mix while monitoring it over your stereo speakers. Finally, the mix is done, gets uploaded to the Apple Music streaming service, and when you check the song over your speakers, it sounds exactly how you mixed it. However, when you listen over headphones (because that is how 80% of the audience is listening to your song), you realize that Apple has removed all the various reverbs in your mix and replaced them with a single 1.5s reverb on the drums, the vocals, the guitars, and even the bass that you left dry. CUT. You wake up drenched in sweat and realize it was only a dream, only a nightmare ... hold that thought.

"Support For Dolby Atmos" ... Really?

Dolby Atmos has been around for ten years now, but mainly as a film sound format. Dolby Atmos Music, however, was only introduced in 2019, and the first mixes were done ‘behind the scenes’ by a handful of mixing engineers in a handful of studios that were equipped for mixing in that new immersive sound format. When Apple entered the arena in June 2021 with their "Support for Dolby Atmos" by adding thousands of songs mixed in Dolby Atmos to their Apple Music streaming service (at no extra cost to the subscribers), it sent a ripple effect through the audio production community. Mixing studios were crunching the numbers to find out if it was feasible to upgrade to Dolby Atmos to stay competitive, and mixing engineers asked themselves if they had to step out of the comfort of their stereo field and enter the wild wild west of mixing in Dolby Atmos.

Now that more and more engineers have started to embrace the uncharted territory of Dolby Atmos Music, there is a sizeable rumble going through the audio production community. Is it possible that there was a major oversight in the production chain with Dolby Atmos, maybe some fine print that we didn't read? Slowly, information came out that was confirmed by engineers who had the pleasure of listening to their Atmos mixes on the Apple Music streaming service.

The Binaurally Rendered version that you listen to (and fine-tune) when mixing in Dolby Atmos sounds different when that Atmos mix is played back on Apple Music. That means all the people who listen to your Atmos mix on Apple Music over headphones (the majority), don't hear the mix the way you created it in the studio. WTF! However, this time you can't wake up from a nightmare because this nightmare is for real.

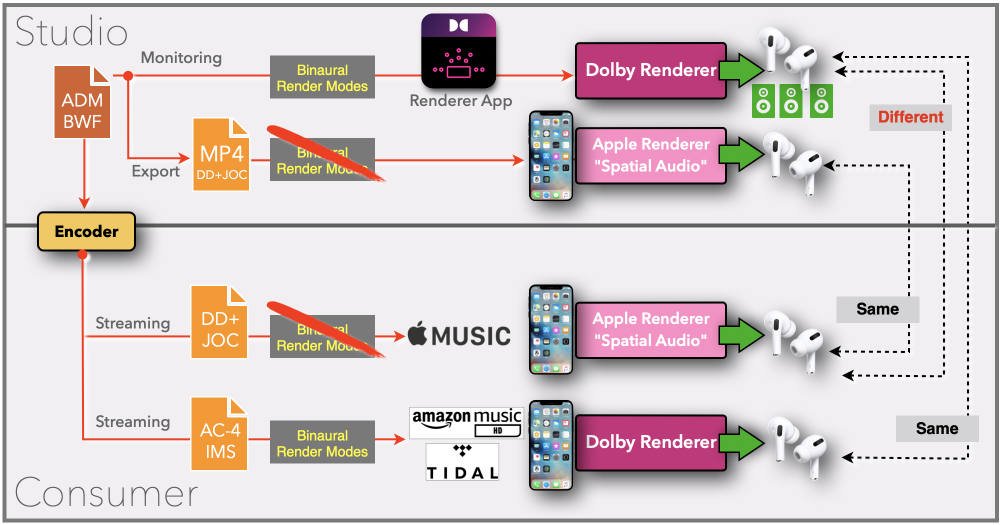

Look at the following diagram where I try to illustrate the problem. If it looks complicated, then you are right; it is. Make sure that before you start your Dolby Atmos Music mix, you understand what is going on here.

In this article, I will try to decipher that in the following twelve sections.


1 - A Blessing and a Curse

Dolby Atmos has two key elements, described by the two buzz-words, "Immersive" and "Object-based". Immersive means that, instead of a 2-dimensional sound field, for example, quad, 5.1, or 7.1, Dolby Atmos can deliver a 3-dimensional sound field. That is nothing new, and you could just mount a few speakers on the ceiling, add some channels to your surround format, and you are ready to go immersive.

However, the real advantage of Dolby Atmos is the move from a traditional "channel-based format" to an "object-based format", which creates some challenges regarding monitoring our Dolby Atmos mixes as we will see.

When we mix in stereo or surround, we have a specific number of speakers that we use to monitor during the mix, and when the end consumer listens to that mix, they will have the same number of speakers. Let's ignore for a moment the variables like mono speakers or various downmix mechanisms.

But, when we mix in Dolby Atmos, we have no clue what speaker layout the end consumer has when listening to our Dolby Atmos mix. The big selling point for the Dolby Atmos format, "create and distribute one file that can adapt to any speaker layout during playback", becomes a big headache for the mixing engineer. What speaker layout do I choose for monitoring when creating my Dolby Atmos mix if there are so many playback scenarios with the end consumer? It is not as easy as with stereo, where you press the Mono button to check the mono compatibility of a stereo mix. You would need multiple "compatibility buttons", one for each format (7.1.2, 5.1, 7.1, stereo, binaural, etc.), representing the various options that a consumer might listen to when enjoying your mix. That is actually the reality and part of the Dolby Atmos mixing workflow. But first, let's have a quick look at that "Compatibility Button" before we discover other major obstacles down the road.

2 - Renderer to the Rescue

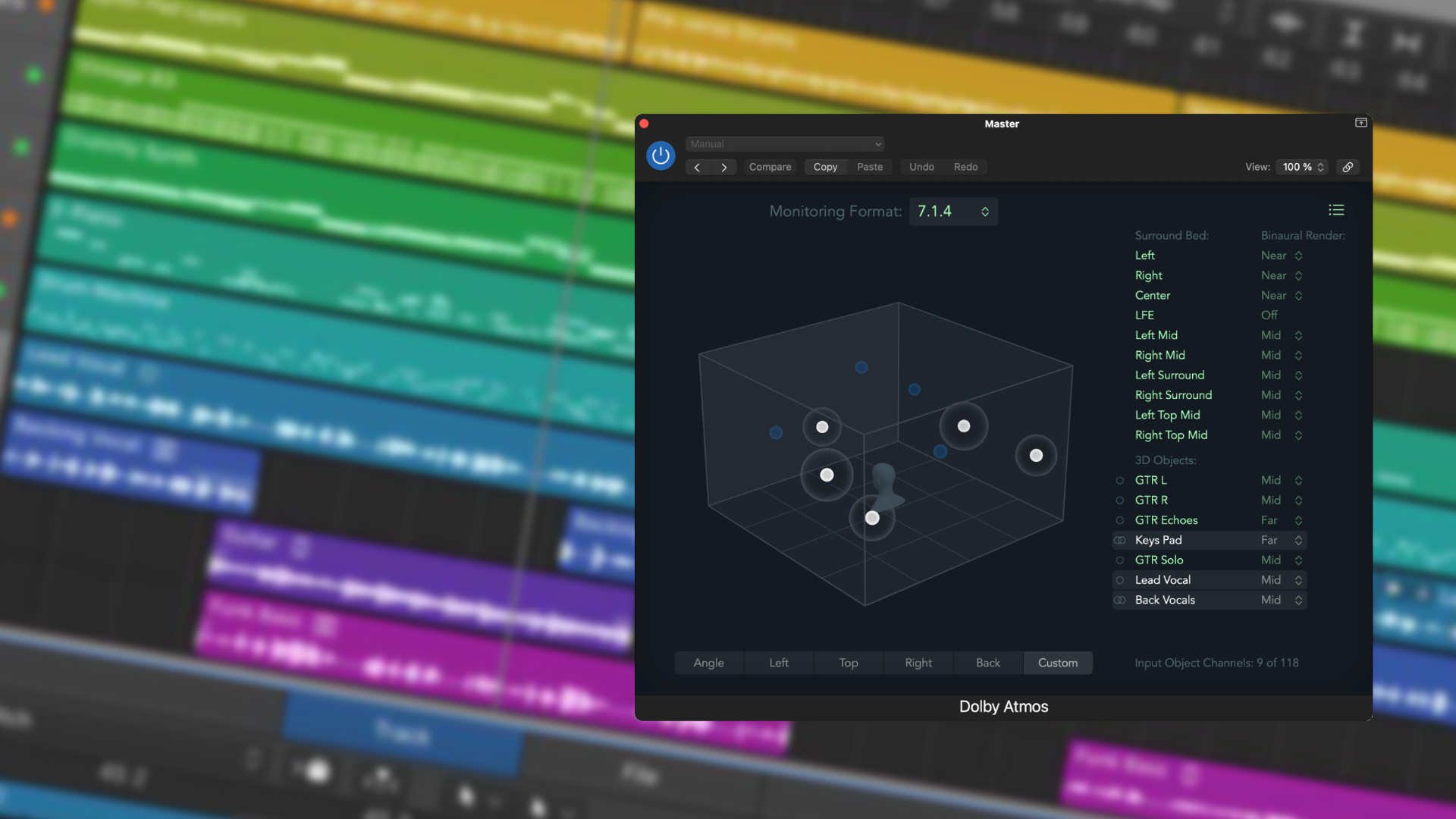

The heart of an object-based audio format, like Dolby Atmos, is the Renderer. A Dolby Atmos mix doesn't have a mix bus with a specific channel width. Instead, up to 128 audio signals are stored separately, and the position in space (XYZ) is also kept separate as metadata for each signal.

That Dolby Atmos mix (the individual audio signals and the corresponding metadata) is used by the Renderer to produce (process) any channel-based audio format (i.e., 7.1.2, 5.1, 7.1, stereo, binaural, etc.) on its output. Don't confuse that with any upmix or downmix procedure that just converts a specific channel-based format to a different channel-based format.
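To make the difference between "object-based" and "channel-based" a bit more tangible, here is a minimal sketch in Python. It is not Dolby's rendering algorithm. It is a toy distance-based panner, and the class name `AudioObject`, the function `render_gains`, and all speaker coordinates are assumptions made up for this illustration. What it does show is the principle: the same object (one signal plus XYZ metadata) can be turned into gains for whatever speaker layout is selected at playback time.

```python
from dataclasses import dataclass
import math


@dataclass
class AudioObject:
    """One audio signal plus its position metadata (illustrative only)."""
    name: str
    x: float  # -1 = left, +1 = right
    y: float  # -1 = back, +1 = front
    z: float  # 0 = ear level, 1 = ceiling


# Hypothetical speaker coordinates (LFE omitted). These numbers are
# assumptions for the sketch, not positions taken from any Dolby spec.
LAYOUTS = {
    "2.0": {"L": (-1, 1, 0), "R": (1, 1, 0)},
    "5.1": {
        "L": (-1, 1, 0), "R": (1, 1, 0), "C": (0, 1, 0),
        "Ls": (-1, -1, 0), "Rs": (1, -1, 0),
    },
    "7.1.4": {
        "L": (-1, 1, 0), "R": (1, 1, 0), "C": (0, 1, 0),
        "Lss": (-1, 0, 0), "Rss": (1, 0, 0),
        "Lrs": (-1, -1, 0), "Rrs": (1, -1, 0),
        "Ltf": (-1, 1, 1), "Rtf": (1, 1, 1),
        "Ltr": (-1, -1, 1), "Rtr": (1, -1, 1),
    },
}


def render_gains(obj: AudioObject, layout: str) -> dict:
    """Toy renderer: speakers closer to the object get more gain.

    The gains are power-normalized so the squared gains sum to 1,
    regardless of how many speakers the chosen layout has.
    """
    raw = {}
    for speaker, pos in LAYOUTS[layout].items():
        distance = math.dist((obj.x, obj.y, obj.z), pos)
        raw[speaker] = 1.0 / (distance + 0.1) ** 2
    norm = math.sqrt(sum(g * g for g in raw.values()))
    return {speaker: g / norm for speaker, g in raw.items()}


# The same object, rendered for three different playback layouts.
vocal = AudioObject("vocal", x=-0.5, y=1.0, z=0.0)
for layout in LAYOUTS:
    gains = render_gains(vocal, layout)
    print(layout, {k: round(v, 2) for k, v in gains.items()})
```

Note that nothing in `vocal` mentions a channel count. The layout is only chosen at render time, and that is the idea behind the one-file-fits-all promise.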

Any consumer Dolby Atmos playback device has that same Renderer built-in. It takes the Dolby Atmos file from your streaming service (that contains the separate audio signals and their metadata) and outputs (renders) a specific channel-based audio signal. That is the same Renderer engine that you have available in your standalone Dolby Atmos Renderer app (or integrated in your DAW like Logic Pro or Nuendo) that you are using when mixing. Unlike a fixed channel-based output on a consumer Atmos playback device, your app lets you select the output format, and that is your "Compatibility Button". You might have a 7.1.4 speaker layout in your studio, and you set the Renderer to that channel-based output. But, at any time, you can switch the Renderer, for example, to 5.1 to listen to how the mix is rendered on a 5.1 system when the height channels are folded down to the surround channels. Or you can even select 2.0 to listen to a 1-dimensional stereo rendered version of your 3D Atmos mix. And, let's not forget the binaural monitoring option, with trouble on the horizon.

3 - Binaural Renderer with different Modes

Besides all the various channel-based playback formats like stereo, quad, 5.1, 7.1, 7.1.4, and all the above, there is one magic output format called Binaural Audio. That technology is nothing new and has lived in the shadow of audio production for decades. Its magical power is to deliver a true 3-dimensional sound experience on two channels when listened to over headphones. Two things happened in recent years that put this format in the front seat: the move to immersive sound production and audio consumption, plus the change in consumer habits to listen to audio mainly over headphones. Although this is not so important when mixing Atmos for film, for Dolby Atmos Music it becomes extremely important to check a mix in Binaural Mode during the Atmos mixing process.

Luckily, that is not a problem because the Dolby Atmos Renderer (or the integrated DAW version) provides that monitoring option. Not only that, you can fine-tune the Binaural Audio experience by setting each audio channel to one of four Binaural Render Modes that represent different HRTF models (think of different room reflections). With these settings, you can make sure that the binaural headphone mix sounds as close as possible to your 7.1.4 speaker playback. However, there is some hidden fine print you have to be aware of, or your dream of having the binaural mix sounding as close as possible to the speaker playback could turn into a nightmare when listening back on a streaming service.

The troublemaker is a company that starts with an "A", and no, this time it is not Avid.

4 - What Happens After My Mix?

With a standard stereo mix, the distribution chains are well known and established. When your mix leaves your studio or the mastering studio, it goes to the vinyl or CD production or gets uploaded to the streaming services. There are some steps that could affect your mix, either due to physical restriction with vinyl or when digital distribution uses lossy compression.

With the distribution of a Dolby Atmos mix, the nerd level is turned up quite a bit. Unfortunately, we have to educate ourselves or at least be aware of what is happening to understand what will happen to our Atmos mix when it "arrives" at the consumer. Here is just a quick overview.

- The Dolby Atmos mix that you deliver in the form of an ADM BWF file will be encoded for playback.

- There are two different codecs that are used by streaming services, "DD+JOC" and "AC4-IMS".

- DD+JOC is the older codec that was designed for speaker playback of Dolby Atmos film content. It ignores all the Binaural Render Mode settings of your mix!

- AC4-IMS is the newer codec that is optimised specifically for binaural playback and uses the Binaural Render Mode settings that you configured in your mix.

- Tidal and Amazon use the AC4-IMS codec for headphone playback on mobile devices and the DD+JOC codec only for speaker playback.

- Apple Music uses only the DD+JOC codec, which means that all the Dolby Atmos Music mixes on Apple Music don't use any of the Binaural Render Modes that were part of your original Dolby Atmos mix!!!

- Keep in mind that streaming services constantly change their settings so this information is valid as of November 2021.
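The overview above boils down to a small lookup table. The following Python sketch only restates what the list says (as of November 2021). The names `Codec`, `HEADPHONE_CODEC`, and `brm_survives` are invented for this illustration and don't correspond to any Dolby or streaming service API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Codec:
    name: str
    keeps_binaural_render_modes: bool


# The two codecs as described in the list above.
DD_JOC = Codec("DD+JOC", keeps_binaural_render_modes=False)
AC4_IMS = Codec("AC4-IMS", keeps_binaural_render_modes=True)

# Codec used for headphone playback per service, as stated in this
# article (valid as of November 2021, services may change this).
HEADPHONE_CODEC = {
    "Apple Music": DD_JOC,
    "Tidal": AC4_IMS,
    "Amazon": AC4_IMS,
}


def brm_survives(service: str) -> bool:
    """True if your Binaural Render Mode settings reach the listener."""
    return HEADPHONE_CODEC[service].keeps_binaural_render_modes


for service in HEADPHONE_CODEC:
    status = "kept" if brm_survives(service) else "ignored"
    print(f"{service}: {HEADPHONE_CODEC[service].name}, BRM settings {status}")
```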

5 - Apple's "Spatial Audio" Mess

Ok, we have isolated the bad guy as Apple for getting rid of our Binaural Render Mode settings and, therefore, distributing a Dolby Atmos mix on Apple Music that is different from the binaural mix that we set in the studio. However, keep in mind that it is not Apple's fault that the DD+JOC codec removes the Binaural Render Modes; it is a Dolby codec. It is only Apple's fault that they chose not to use the newer AC4-IMS codec that would contain the binaural metadata. There is speculation as to why they made that choice.

However, we can't let Apple off the hook yet, because they threw another curveball in the mix, and that is called "Spatial Audio". It is a mess all by itself, and I get into more details in my book "Mixing in Dolby Atmos - #1 How it Works". Here are the important facts that you have to be aware of:

- Apple is using their own Renderer called "Spatial Audio" to play back Dolby Atmos mixes that are delivered to your Apple device as a DD+JOC codec.

- Any Dolby Atmos mix that you listen to on Apple Music is played back by Apple's own Renderer and does not (!) use the Dolby Renderer that you are using when monitoring your Dolby Atmos during mixing.

- The only exception is when playing back Apple Music content on the AppleTV through the HDMI output connected to a Dolby Atmos capable AV receiver or soundbar. In that case, you are listening to the Dolby Renderer and not the Apple Renderer.

- Apple is using the original Dolby Atmos mix in the form of the encoded DD+JOC file, but their own Renderer, the spatial audio engine, creates a Headphone Virtualization (their form of Binaural Audio) and Speaker Virtualization (for playing on their supported iPads and Macs). It is more like a "spatial interpretation" of your Dolby Atmos mix, and there is no "Apple Spatial Audio Emulation" button that you can enable while creating your Dolby Atmos mix. Bottom line, you are mixing blind for Apple Music because you don't know how your Atmos mix will sound.

6 - The Problem

Hopefully, by now, you get the idea of the major problems we are facing to create a Dolby Atmos music mix. The actual mix available on the streaming services is different from what we monitor during the mix. So be aware of the following points:

- Mixing and monitoring on a proper speaker setup (7.1.4 or higher) in your studio is recommended and a good idea for accurate spatial positioning of your signals. However, virtually none of the end consumers actually have such a listening environment. Check out my other article, Can You Mix Dolby Atmos On Headphones?

- The common speaker playback solutions for Dolby Atmos for the end consumer are smart speakers and soundbars. However, the Dolby Atmos mixing environment has no option to monitor on those systems. Besides that fact, most studio acoustics are not even suitable for playing back these speakers that rely on room/ceiling reflections.

- To guarantee that your mix sounds good in the environment most users listen to (headphones), you have to check and monitor with headphones in Binaural Mode and make necessary adjustments with the Binaural Render Modes. However, as we have seen, that metadata is only used on streaming services that support the AC4-IMS codec (Tidal, Amazon) but not the DD+JOC codec (Apple Music).

- Apple and their "Spatial Audio" engine make it impossible to monitor your mix in the studio the way it is listened to by their 72 million subscribers.

7 - The Workaround

The Dolby Atmos mixing environment works fine for film mixes. The only variable between creation and consumption is the number of available speaker channels on the dubbing stage and movie or home theater. With Dolby Atmos Music, it seems that Dolby hasn't come up with the tools to enable engineers to monitor their songs properly. The additional Binaural Render option doesn't work as long as Apple uses the DD+JOC codec and their own (different) Spatial Audio renderer.

The lack of a real-time ‘After Codec Emulation’ monitoring option that lets you monitor through the Renderer after all the (DD+JOC or AC4-IMS) processing is a huge problem. In particular, an option to connect such an output directly to a consumer AV receiver or smart speaker would provide a better real-life monitoring check. Some studios already use expensive Dante-equipped AVRs to play consumer content back over their studio speaker setup. Dolby already has the "Spatial Coding Emulation" (part of the DD+JOC codec) that lets you monitor with that coding step, so why not the entire DD+JOC and Apple Spatial Audio emulation? Maybe the reason is that they don't want to share the secret sauce in Apple's Spatial Audio. Maybe a future version of Logic Pro will be the first DAW that includes that "Apple Spatial Audio Monitoring" feature because the secret would stay in the family?

The only "band aid" solution that the Dolby Atmos Renderer provides is an MP4 export of the Dolby Atmos Master File. The MP4 file functions as a wrapper that contains the DD+JOC encoded mix that can be played back on consumer devices. However, this is an offline solution that requires multiple steps with various degrees of IT knowledge that might exceed the comfort zone of many sound engineers.

Playing back the MP4 file on the iPhone is as close as it gets to hearing your mix through Apple's Spatial Audio engine, but again, it involves many steps with many variables that Apple is constantly changing. The implementation of Spatial Audio itself on the iPhone is so terrible and unintuitive that it constantly gets your frustration level up.

8 - The (clumsy) Solution

Here are some procedures for playing back the MP4 file on consumer devices:

- iPhone: Move the MP4 file to your iPhone via AirDrop or iCloud and play it back on your iPhone (iOS 15.1) from the Files app via AirPods Pro/Max. Spatial Audio has to be enabled on the iPhone.

- USB stick: Load the MP4 file onto an exFAT formatted USB stick. If your Dolby-enabled playback device, for example, a Blu-ray Player or AV Receiver, has a USB slot, then you can use it to play back your mix through your system.

- Plex: Playback via the Plex server application from a Mac or Windows computer to the Plex Player application on an AppleTV4k connected via HDMI to an Atmos-enabled AVR.

- HLS: Stream from AWS storage packaged as Apple HLS via a Safari browser and “pushed” to an AppleTV4k using Airplay connected via HDMI to an Atmos-enabled AVR.

- HLS Browser: Stream from a Mac or Windows computer configured as an HTTP server with playback of HLS packaged .m3u8 via a Safari browser “pushed” to an AppleTV4k using Airplay.

- One more: Use MPEG-Dash packaged .mpd from the VLC application to a Google Chromecast Ultra4k connected via HDMI to an Atmos-enabled AVR.
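For the "HLS Browser" option, the computer only has to act as a plain HTTP server that hands out the .m3u8 playlist with the right content type. Here is a minimal sketch using nothing but Python's standard library. Take it as a starting point under a few assumptions: the folder name `hls_out` and port 8000 are made up, the HLS packaging itself has to be done beforehand with a separate tool, and I make no promise that this alone is enough for every Safari/AirPlay combination.

```python
import functools
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class HLSRequestHandler(SimpleHTTPRequestHandler):
    """Static file handler that serves HLS playlists with their MIME type."""

    extensions_map = {
        **SimpleHTTPRequestHandler.extensions_map,
        ".m3u8": "application/vnd.apple.mpegurl",  # HLS playlist
        ".mp4": "video/mp4",                       # media files / segments
    }


def make_server(directory: str, port: int = 8000) -> ThreadingHTTPServer:
    """Create (but don't start) a server for the given folder."""
    handler = functools.partial(HLSRequestHandler, directory=directory)
    return ThreadingHTTPServer(("", port), handler)


# Usage (blocks until interrupted with Ctrl-C):
#   make_server("hls_out").serve_forever()
# Then open http://<your-computer-ip>:8000/<playlist>.m3u8 in Safari.
```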

9 - The Detailed iPhone Procedure

Here is the detailed step-by-step procedure for playing back the mp4 exported file on an iPhone to listen through the Spatial Audio engine (there are a few variations for some steps).

The first part of the procedure generates the MP4 file and gets it to the iPhone:

- Step 1 - Open the DAMF or ADM BWF file in the Dolby Atmos Renderer.

- Step 2 - Choose the menu command "File > Export Audio > MP4".

- Step 3 - A dialog opens that lets you configure the export. Select Music, No video, and click Export.

- Step 4 - The MP4 file will be created in the designated folder on your computer.

- Step 5 - Right-click on the file to open the contextual menu where you can verify the parameters Kind: "MPEG-4 movie", Codecs: "Dolby Digital Plus", Audio channels: "6".

- Step 6 - On the contextual menu, select "Share > AirDrop" to open the AirDrop window.

- Step 7 - Select the iPhone that you want to send the file to.

- Step 8 - On the iPhone, accept the AirDrop, and on the next dialog, select "Open with Files".

- Step 9 - Save the file to the Downloads folder of the iCloud Drive.

- Step 10 - Open the Files app on your iPhone and select the MP4 file, which opens the player that lets you play back that file. But before you do that, you have seven additional steps to make sure that Spatial Audio is enabled.
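If you export often, the visual check in Step 5 can also be scripted. The sketch below is not part of the Dolby workflow. It assumes that you have FFmpeg's `ffprobe` command-line tool installed, and the function names and the file name in the usage comment are hypothetical. It looks for the same two things as Step 5: a Dolby Digital Plus audio stream (which ffprobe reports as `eac3`) with 6 channels. It does not verify the Atmos (JOC) metadata itself.

```python
import json
import subprocess


def probe_audio_streams(path: str) -> list:
    """Ask ffprobe (assumed to be installed) for codec and channel count."""
    result = subprocess.run(
        [
            "ffprobe", "-v", "error",
            "-select_streams", "a",
            "-show_entries", "stream=codec_name,channels",
            "-of", "json",
            path,
        ],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout).get("streams", [])


def looks_like_ddp_export(streams: list) -> bool:
    """Same check as Step 5: Dolby Digital Plus (E-AC-3) with 6 channels."""
    return any(
        s.get("codec_name") == "eac3" and s.get("channels") == 6
        for s in streams
    )


# Usage (file name is a placeholder):
#   streams = probe_audio_streams("my_atmos_mix.mp4")
#   print("OK" if looks_like_ddp_export(streams) else "Check your export")
```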

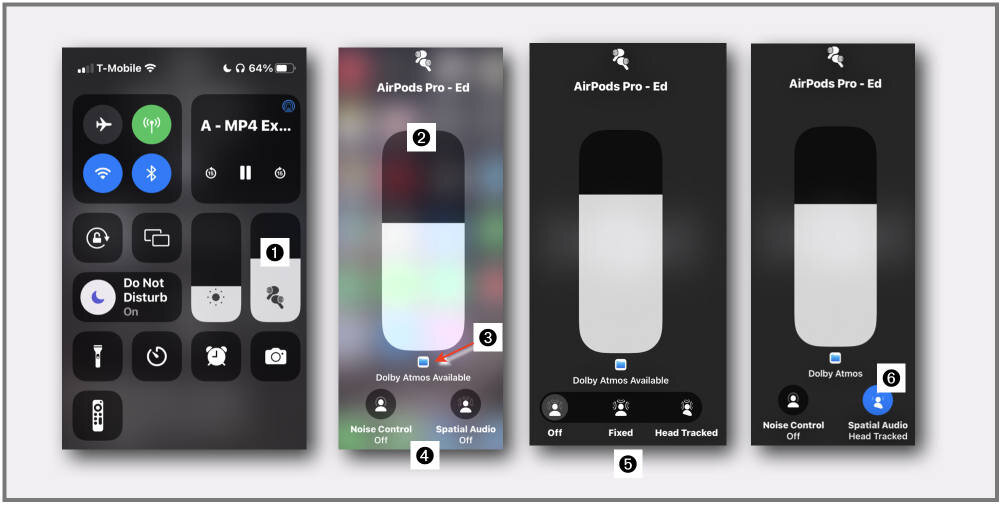

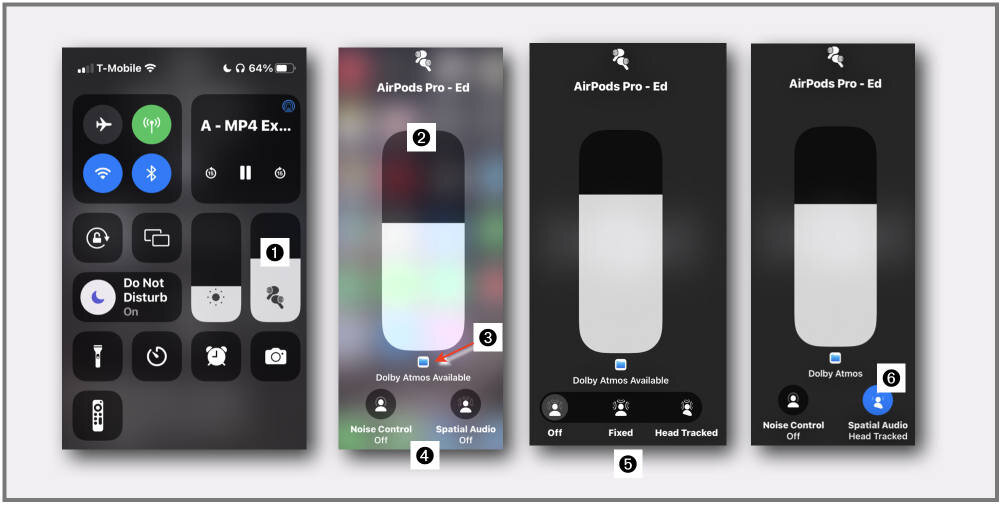


This is the second part of the procedure to configure the iPhone in iOS 15.1.

- Step 1: Make sure you have the AirPods Pro or any other Spatial Audio compatible AirPods connected. You can verify that in the Control Center by the icon on the volume slider.

- Step 2: Tap-hold on the volume slider to open the big volume slider screen.

- Step 3: The screen shows the icon for the Files app and the label "Dolby Atmos Available" to indicate that you have a Dolby Atmos file (your mp4 exported DD+JOC file) ready to play from the Files app.

- Step 4: The two buttons below let you configure the Noise Cancellation (set your preference) and Spatial Audio. Tap on the Spatial Audio button.

- Step 5: Now, three options for Spatial Audio appear. Select either "Head Tracking" that enables Spatial Audio with Head Tracking or "Fixed" for Spatial Audio without Head Tracking.

- Step 6: Once you make a selection, the three buttons disappear, and the selected button remains blue (enabled).

- Step 7: Tap on the background to close the screen and tap again to close the Control Center.

Now the iPhone has Spatial Audio enabled, and you can listen to your Atmos mix (the MP4 file) playing through Apple's Spatial Audio engine, the way it would sound when that mix is later played back from the Apple Music streaming service.

Keep in mind that this monitor procedure is offline, and every time you make adjustments in your mix, you have to go through the procedure again. This workflow falls under the category, "you must be kidding me".

10 - Listening Test

Here are two examples if you want to test this out. I created a Dolby Atmos mix where I recorded my voice announcing the 11 channel positions of a 7.1.4 setup one at a time, panned to that position in space. I repeat each position announcement four times with the corresponding Binaural Render Mode setting, "Left BRM Off", "Left BRM Near", "Left BRM Mid", "Left BRM Far", so you can hear the effect of the different Binaural Render Mode settings:

Atmos Binaural Render Modes Test - Binaural Bounce


- File 1 in the audio player above is the 2-channel audio file of the Binaurally Rendered version. Listen with your headphones (Spatial Audio disabled!) to hear the effect of the four BRM settings.

- File 2 (22MB): This is the MP4 exported file of that ADM BWF file. Listen on your iPhone with Spatial Audio enabled. You will hear that the four versions of each position sound the same because the BRM are stripped, and Apple's Spatial Audio engine replaced them with a single setting for all four. That would be the Apple Music version.

- There is also the original ADM BWF file. This is a 1.8GB download. If you would like a copy, please contact me directly at Ding Ding Music and I’ll arrange a link to the file for you. You can open it in the Dolby Atmos Renderer App (or Atmos-enabled DAW) to conduct your own tests.

11 - The Future

Even if you know that the Binaural Render Modes are ignored on Apple Music and replaced with their own engine, it becomes a guessing game what setting you should choose for your mix. Some suggest leaving all audio channels at the "Mid" setting as the closest option. However, for the other services that use that metadata, you want to tweak the settings to create the best mix possible.
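The bookkeeping behind that dilemma can be sketched in a few lines of Python. This is pure illustration: the Renderer does not expose its settings like this, and the track names and the "tuned" choices are made up. Only the four modes (Off, Near, Mid, Far) and the "all Mid" suggestion come from this article.

```python
from enum import Enum


class BRM(Enum):
    """The four Binaural Render Modes."""
    OFF = "Off"
    NEAR = "Near"
    MID = "Mid"
    FAR = "Far"


# Hypothetical per-track settings, tuned for services that use the metadata.
tuned_profile = {
    "drums": BRM.NEAR,
    "bass": BRM.OFF,
    "vocals": BRM.MID,
    "guitars": BRM.FAR,
}


def all_mid(profile: dict) -> dict:
    """The 'leave everything at Mid' variant of a profile."""
    return {track: BRM.MID for track in profile}


def differing_tracks(a: dict, b: dict) -> list:
    """Tracks whose setting differs between two profiles."""
    return sorted(track for track in a if a[track] != b[track])


mid_profile = all_mid(tuned_profile)
print("Tracks you would have to check twice:",
      differing_tracks(tuned_profile, mid_profile))
```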

This is a terrible mixing workflow where you have to maintain two BRM settings. Was this acknowledged and signed off during negotiations between Apple and Dolby? For whatever reasons, we can only hope that the current situation is only a work-in-progress transition. However, it is up to the audio community to let Dolby and Apple know that this is an unacceptable situation that compromises the quality of the very content they provide to their consumers.

12 - The Conspiracy

And last but not least, some thoughts about why we are in this Atmos monitoring mess in the first place. Why did Dolby allow Apple to do their own thing? Once you saw Apple's strange press release "... with support for Dolby Atmos", it was clear that something was up. They could have said, "Playback of Dolby Atmos mixes". It is clear by now that Apple didn't allow for the original Dolby Atmos Music mix to be played back on their devices. They developed their own immersive audio technology for quite some time with their own renderer called Spatial Audio and just allowed Dolby to run their Atmos codec through Apple's engine. Remember that Spatial Audio can use any surround format (5.1 or 7.1) and even a stereo file to be processed through Apple's Spatial Audio engine to create an immersive Headphone Virtualization or Speaker Virtualization. Maybe in the future, they will also "support" the Sony 360 Reality Audio format that is already available on other streaming services.

Dolby Atmos seems to be only one part of Apple's greater Spatial Audio strategy. FaceTime calls already support immersive audio virtualization. Head Tracking is already working in Spatial Audio due to their built-in AirPods technology without the need for clumsy add-ons to clip on your headphones. And there are the rumoured augmented reality glasses. All of these AR, VR, and MR technologies work best when the audio part is also a convincing 3D binaural experience.

In conclusion, the monitoring "challenges" we are currently facing with Dolby Atmos might be just growing pains of a bigger shift that is definitely happening with the further development of immersive audio and next-generation audio. However, instead of turning your back on that not-yet-matured technology, ask yourself if you can afford to exclude yourself and not be part of that bumpy ride into the future of audio technology.

Further Reading

I hope you find the information in this article useful. If you want to learn more about Dolby Atmos, please check out my two books "Mixing in Dolby Atmos - #1 How it Works" and "Logic Pro - What's New in 10.7" with detailed explanations of the Atmos integration in Logic Pro.

The books are available as pdf, iBook, Kindle, and printed book with all the links on my website.